Go vs Python for Web Scraping: What's Better?

by Anas El Mhamdi

I am a JavaScript and Python developer and always try to learn new things. Go has emerged over the past few years as a language for shipping code quickly and safely, so I wanted to give it a try by benchmarking it against Python. My favourite use case is web scraping, so that is what I used to compare the two languages. I measured both execution time and memory consumption.

What are we scraping?

In this article, I will be scraping this Wikipedia page to extract a list of NBA All-Stars and then scrape each player’s page to get basic information about them.

The task involves:

- Getting the list of players from the main page

- Scraping individual player profiles (250+ players)

- Collecting basic player information like height and weight

- Testing both single-threaded and concurrent implementations

- Using proxies for all requests

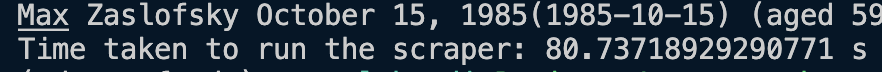

Single-threaded Python

I started with Python because I’m most familiar with it. The code using the requests library is fairly straightforward and concise:
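A minimal sketch of what such a single-threaded scraper can look like, not the exact code that was benchmarked. To keep it self-contained, parsing uses the standard library's `html.parser`, and the HTTP call is passed in as a `fetch` function. The "Listed height" and "Listed weight" labels are assumptions about the infobox markup on player pages, and `PROXIES` in the comment is a hypothetical placeholder.

```python
from html.parser import HTMLParser


class InfoboxParser(HTMLParser):
    """Collect label/value pairs from <th>/<td> cells."""

    def __init__(self):
        super().__init__()
        self.fields = {}
        self._cell = None   # "th" or "td" while inside a cell
        self._label = ""    # text of the most recent <th>
        self._text = []

    def handle_starttag(self, tag, attrs):
        if tag in ("th", "td"):
            self._cell = tag
            self._text = []

    def handle_data(self, data):
        if self._cell is not None:
            self._text.append(data)

    def handle_endtag(self, tag):
        if tag != self._cell:
            return
        # Collapse runs of whitespace inside the cell
        text = " ".join("".join(self._text).split())
        if tag == "th":
            self._label = text
        elif self._label:
            self.fields[self._label] = text
            self._label = ""
        self._cell = None


def parse_player(html):
    """Extract height and weight from one player page."""
    parser = InfoboxParser()
    parser.feed(html)
    return {
        # Assumed infobox labels; adjust to the real page markup.
        "height": parser.fields.get("Listed height"),
        "weight": parser.fields.get("Listed weight"),
    }


def scrape_players(urls, fetch):
    """Fetch and parse pages one after another (no concurrency).

    With requests, fetch could be something like:
        lambda url: requests.get(url, proxies=PROXIES, timeout=10).text
    """
    return [parse_player(fetch(url)) for url in urls]


if __name__ == "__main__":
    sample = (
        "<table><tr><th>Listed height</th><td>6 ft 6 in (1.98 m)</td></tr>"
        "<tr><th>Listed weight</th><td>216 lb (98 kg)</td></tr></table>"
    )
    print(scrape_players(["player-page"], lambda url: sample))
```

Because every page is fetched only after the previous one has finished, the total run time is roughly the sum of all request latencies, which is why this version is the slowest.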

The execution time was approximately 80 seconds.

This is pretty slow but the code is simple to write and understand, which is one of Python’s biggest strengths.

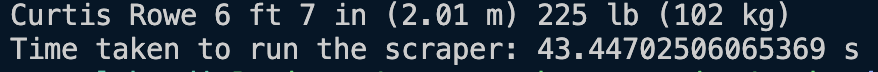

Python with concurrency

To speed things up, I used the aiohttp library to make concurrent requests:
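The general pattern can be sketched with `asyncio` alone. This is an illustration under assumptions rather than the benchmarked code: `fake_fetch` is a hypothetical stand-in for a real aiohttp request, and the `max_concurrency` cap of 20 is an arbitrary choice.

```python
import asyncio


async def scrape_concurrently(urls, fetch, max_concurrency=20):
    """Run fetch(url) for every URL concurrently, capped by a semaphore."""
    semaphore = asyncio.Semaphore(max_concurrency)

    async def bounded_fetch(url):
        # At most max_concurrency requests are in flight at any time
        async with semaphore:
            return await fetch(url)

    # gather returns results in the same order as the input URLs
    return await asyncio.gather(*(bounded_fetch(url) for url in urls))


async def fake_fetch(url):
    # Hypothetical stand-in for an HTTP request: sleep to simulate latency
    await asyncio.sleep(0.05)
    return f"<html>{url}</html>"


if __name__ == "__main__":
    urls = [f"page-{i}" for i in range(10)]
    pages = asyncio.run(scrape_concurrently(urls, fake_fetch))
    print(len(pages), "pages fetched")
```

In a real scraper, `fetch` would wrap a request made through an aiohttp session. The semaphore keeps the number of simultaneous connections bounded, which matters when every request goes through a proxy.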

The concurrent version is more verbose and less intuitive than the single-threaded code, but it’s approximately 2x faster, completing in around 40 seconds.

To measure memory consumption, I used the tracemalloc library:
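A minimal sketch of measuring peak memory with `tracemalloc`, assuming the scraper is wrapped in a single function. Here `build_pages` is a hypothetical stand-in that just allocates some data. Note that `tracemalloc` reports memory allocated through Python's own allocators.

```python
import tracemalloc


def measure_peak_memory(func, *args, **kwargs):
    """Run func and return (result, peak traced memory in MB)."""
    tracemalloc.start()
    try:
        result = func(*args, **kwargs)
        # get_traced_memory() returns (current, peak) in bytes
        _current, peak = tracemalloc.get_traced_memory()
    finally:
        tracemalloc.stop()
    return result, peak / 1_000_000


def build_pages(n):
    # Hypothetical stand-in for the scraper: allocate n buffers of 10 kB
    return [bytearray(10_000) for _ in range(n)]


if __name__ == "__main__":
    pages, peak_mb = measure_peak_memory(build_pages, 100)
    print(f"Peak memory: {peak_mb:.1f} MB")
```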

Peak memory consumption was approximately 66 MB.

Starting with Go

Before diving into Go, I had to learn the basics. Here are the resources I used:

- Official Go installation guide

- Getting started tutorial

- The Go tour

- ChatGPT for quick questions

The learning curve was surprisingly manageable coming from Python and JavaScript.

Single-threaded Go

For Go, I used the Colly library, which covers the role that requests plus an HTML parser play in Python. It fetches pages and uses callbacks to parse the HTML.

First, I initialized the project:

go mod init nba_all_stars_scraper

Then I implemented the scraper:

The execution time was approximately 16 seconds - that’s about 5x faster than Python!

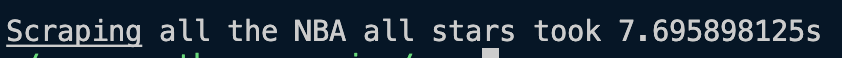

Go with concurrency

Go’s concurrency model uses goroutines and wait groups. Here’s the concurrent implementation:

The key components:

- Goroutines: lightweight threads managed by the Go runtime that run functions concurrently

- WaitGroups: synchronize concurrent operations

The concurrent version completed in 7.7 seconds - approximately:

- 2x faster than single-threaded Go

- 5x faster than concurrent Python

- 10x faster than single-threaded Python
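The ratios above follow directly from the measured timings. A quick check, using the approximate figures reported in this article:

```python
# Execution times in seconds, as reported in this article
timings = {
    "python_single": 80.0,
    "python_concurrent": 40.0,
    "go_single": 16.0,
    "go_concurrent": 7.7,
}


def speedup(baseline, candidate):
    """How many times faster candidate is than baseline."""
    return timings[baseline] / timings[candidate]


for baseline in ("go_single", "python_concurrent", "python_single"):
    print(f"go_concurrent vs {baseline}: {speedup(baseline, 'go_concurrent'):.1f}x")
# go_concurrent vs go_single: 2.1x
# go_concurrent vs python_concurrent: 5.2x
# go_concurrent vs python_single: 10.4x
```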

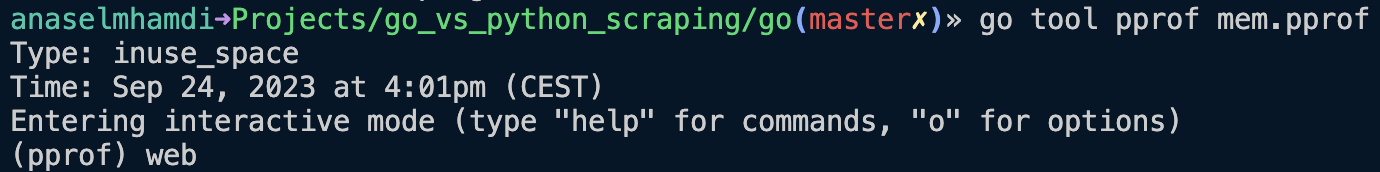

Memory profiling with pprof

Go provides excellent profiling tools through pprof. Here’s how to use it:

After running the program, I analyzed the memory profile:

go tool pprof -http=:8080 mem.prof

This opens a visualization in your browser.

The concurrent Go program consumed only 5.5 MB of memory - that’s over 10x more efficient than Python’s 66 MB!

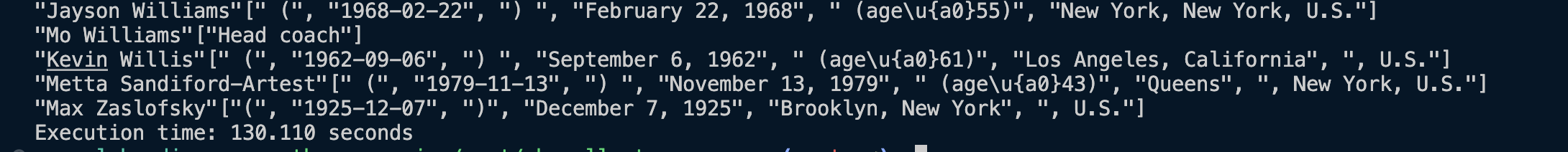

Bonus: Rust comparison

I also briefly tested Rust to see how it compares:

Single-threaded Rust:

- Execution time: 130 seconds (about 60% slower than single-threaded Python!)

- Much more verbose than both Go and Python

- Requires explicit typing and attention to ownership and borrowing

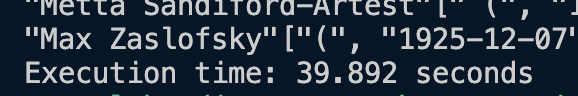

Concurrent Rust:

- Execution time: 39 seconds (only marginally faster than concurrent Python’s ~40 seconds)

- Significantly more complex implementation

- Better suited for CPU-intensive operations

While Rust is a powerful language, its complexity makes it less ideal for I/O-bound tasks like web scraping.

Conclusion

Based on these results, Go is the clear winner for web scraping:

| Language | Single-threaded time | Concurrent time | Peak memory |
|---|---|---|---|
| Python | ~80s | ~40s | ~66 MB |
| Go | ~16s | 7.7s | 5.5 MB |
| Rust | 130s | 39s | N/A |

Go offers:

- Superior performance: 5-10x faster than Python

- Excellent memory efficiency: 10x less memory usage

- Reasonable complexity: Much simpler than Rust

- Great tooling: Built-in profiling and testing tools

I think I have some legacy Python refactoring to do…

You can find all the code for this comparison on GitHub.